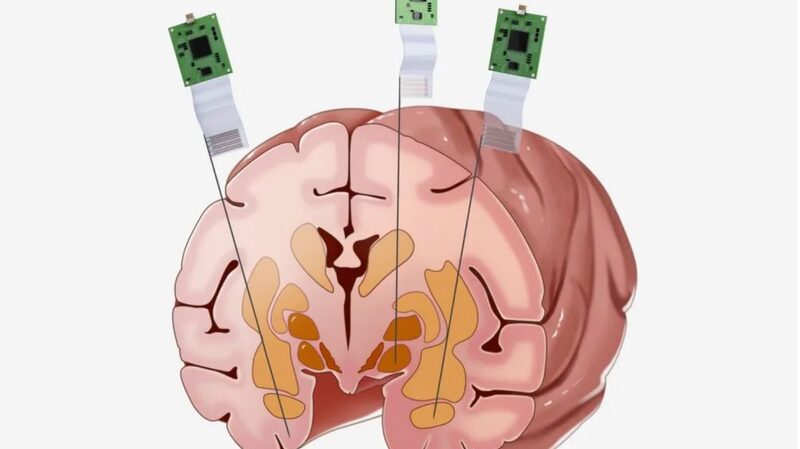

Researchers from Israel and the U.S. have identified a parallel between human cognition and artificial intelligence: both process language through similar sequential mechanisms. The study, published this week in Nature Communications, shows that neural activity in the brain’s language centers follows a step-by-step pattern akin to the operations of large language models (LLMs) such as those powering modern AI tools.

Led by teams at the Hebrew University of Jerusalem and U.S. institutions, the research analyzed brain scans of participants listening to spoken narratives. The observed neural processing hierarchy, running from basic sound recognition to complex semantic interpretation, mirrored the layered architecture of LLMs. The finding does not suggest that the two structures are identical; rather, it highlights how biological evolution and engineering have converged on similar approaches to deriving meaning from language.

Dr. Sarah Cohen, a computational neuroscientist involved in the project, noted: “This isn’t about brains being computers, but rather about shared strategies for solving the universal challenge of language comprehension.” The findings could accelerate work in both AI and neurological research, potentially improving treatments for language disorders and informing more biologically inspired machine learning systems.

Reference(s):

cgtn.com