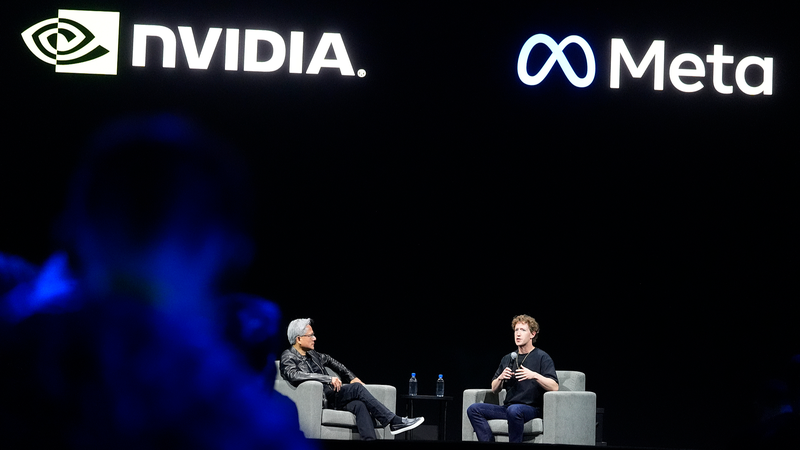

On February 18, 2026, Nvidia announced a landmark agreement to supply Meta Platforms with millions of artificial intelligence chips over multiple years. The deal covers current-generation Blackwell AI accelerators and next-generation Rubin processors scheduled for future release, alongside standalone installations of Grace and Vera central processing units (CPUs).

The partnership marks a strategic expansion for Nvidia into emerging AI applications such as autonomous agent systems, while challenging traditional CPU manufacturers like Intel and AMD. Meta's adoption of Nvidia's Arm-based Grace CPUs follows reported 50% energy efficiency gains for database operations compared to conventional solutions, with further improvements anticipated from the Vera architecture.

This development comes as Meta simultaneously develops proprietary AI chips and explores a collaboration with Google involving Google's Tensor Processing Units (TPUs). Industry analysts suggest the deal reinforces Nvidia's dominance in AI infrastructure, with Meta likely among the four major clients that together accounted for 61% of Nvidia's Q1 2026 revenue.

Ian Buck, Nvidia's hyperscale computing lead, emphasized the technological leap: "Our Grace processors are redefining power efficiency benchmarks. With Vera's upcoming deployment, we're enabling sustainable AI scaling across industries."

The agreement underscores intensifying competition in AI hardware development, a trend of particular relevance to Asian tech manufacturers and investors monitoring semiconductor supply chain dynamics.

Reference(s):

cgtn.com