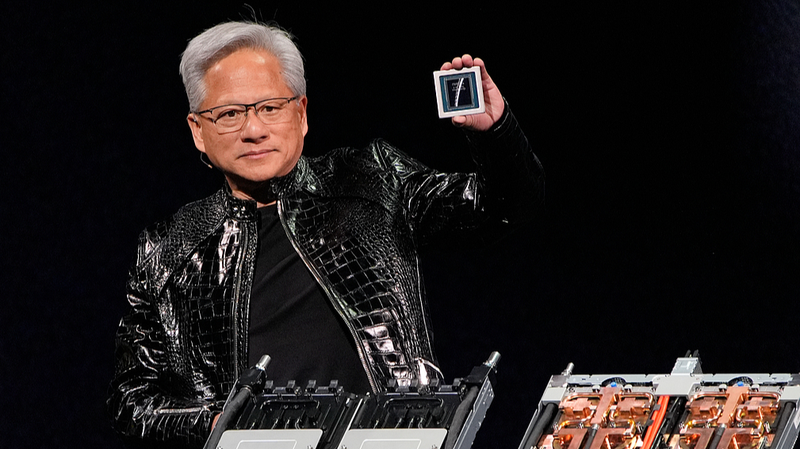

Nvidia CEO Jensen Huang announced at CES 2026 in Las Vegas that the company's Vera Rubin AI chip platform is now in full production. According to Nvidia, the new architecture delivers five times the AI computing performance of its previous chips when running chatbots and other AI applications, marking one of the most significant performance jumps in semiconductor history.

Next-Gen Architecture

The Vera Rubin platform is built from six separate Nvidia chips. Each flagship server will contain 72 graphics processing units (GPUs) and 36 of the company's new central processors (CPUs), and these servers can be linked into "pods" of more than 1,000 Rubin chips. Nvidia says the design could improve the efficiency of generating AI tokens, the units of text and other data that AI models process and produce, by a factor of 10.

Competitive Landscape

Although Nvidia still dominates the market for systems that train AI models, it faces growing competition from traditional rivals such as AMD and from cloud partners developing their own chips. To keep its lead in serving AI applications at scale, the company introduced networking technology based on co-packaged optics, which competes directly with offerings from Broadcom and Cisco, along with a new "context memory storage" layer.

Industry Adoption

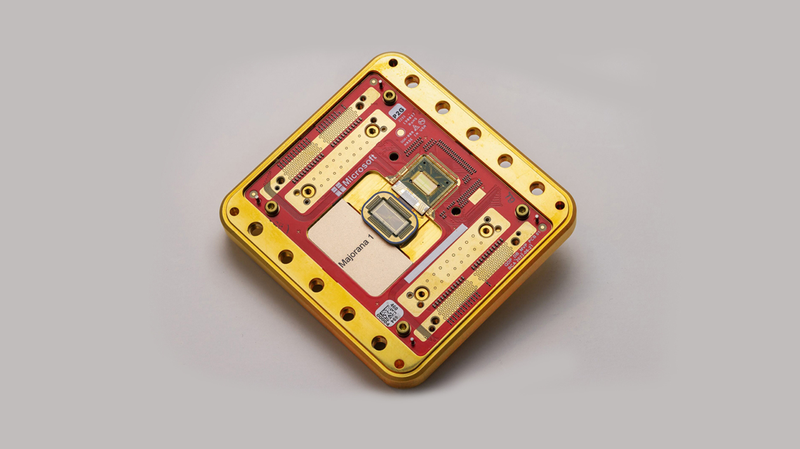

CoreWeave will be among the first adopters of Vera Rubin systems, with Microsoft, Oracle, Amazon, and Alphabet expected to follow. Huang also emphasized openness, announcing the release of training data for Alpamayo, Nvidia's autonomous-vehicle software first introduced in late 2025, to make the software's decision-making more transparent.

Strategic Moves

Recent deals, including one for talent and technology from AI chip startup Groq, position Nvidia to expand its product lineup, though Huang assured analysts that these moves won't affect the company's core business. Nvidia continues to balance partnerships with tech giants against developing proprietary technologies, such as the specialized data format used by the Rubin chips, which it hopes will become an industry-wide standard.

Reference(s):

Nvidia CEO Huang says next generation of chips in full production

cgtn.com