China Reaffirms Commitment to Mediate Ukraine Peace Talks in 2026

China reiterates its role in promoting dialogue for Ukraine peace, emphasizing President Xi’s diplomatic efforts and commitment to political settlement in 2026.

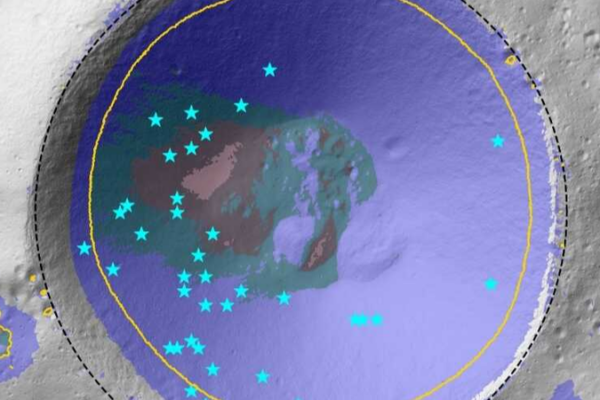

China’s Chang’e-7 Mission Gains Lunar Ice ‘Treasure Map’ for 2026 Landing

Chinese scientists create lunar ‘treasure map’ to guide Chang’e-7’s 2026 water ice detection mission at the Moon’s south pole, advancing space resource exploration.

China’s 15th Five-Year Plan: Key Priorities for 2026–2030 Unveiled

China’s 15th Five-Year Plan (2026–2030) outlines modernization priorities in tech innovation, green energy, and market reforms ahead of key political meetings.

China’s ‘Rail Doctor’ Ensures High-Speed Safety Ahead of March 1 Reveal

China’s unmanned ‘Rail Doctor’ inspection train uses cutting-edge technology to maintain the world’s largest high-speed rail network, with new details emerging March 1.

China’s 2026 Space Ambitions: Crewed Missions, Global Collaboration, and Long-Duration Flights

China announces ambitious 2026 space plans, including crewed missions, international collaboration, and a year-long orbital endurance test to advance space exploration.

China Urges Citizens to Avoid Travel to Iran Amid Rising Security Risks

China issues travel advisory for Iran, urging citizens to avoid travel and enhance security measures amid heightened regional risks.

EU Moves Forward with Mercosur Trade Pact Amid French Resistance

The EU begins provisional application of its landmark Mercosur trade deal, facing pushback from France over agricultural concerns. The agreement aims to create one of the world’s largest free trade zones.

CPPCC Member Lin Minjie Champions Cross-Strait Education Ties

CPPCC member Lin Minjie advances cross-strait dialogue through education initiatives as China prepares for its 2026 political advisory session, fostering youth connections.

Icelandic Prodigy Masters Sichuan Opera Face-Changing in Chengdu

A 12-year-old Icelandic boy’s passion for Sichuan Opera face-changing, sparked by ‘Kung Fu Panda,’ leads him to master the art in Chengdu, bridging cultures through tradition.

US Targets 4,500 Monthly Refugee Admissions from White South Africans

The US plans to process 4,500 refugee applications monthly from white South Africans, surpassing Trump’s 2026 global cap. The program faces recent pauses amid controversy.

Voith CEO Highlights China-Germany Cooperation Post-Merz Visit

Voith Group CEO Dirk Hoke discusses strengthened China-Germany economic ties following Chancellor Merz’s recent visit, highlighting opportunities for deeper collaboration in 2026.

China Urges Restraint as Pakistan-Afghanistan Tensions Escalate

China calls for dialogue amid rising tensions between Pakistan and Afghanistan, emphasizing regional stability and economic cooperation.

China Tightens Export Controls on Japan: What It Means for Asia

China implements targeted export restrictions on Japanese entities, focusing on dual-use technologies while maintaining normal trade relations. Includes analysis of the regional economic implications.

China Suspends Tariffs on Key Canadian Exports to Boost Trade Ties

China announces suspension of tariffs on Canadian agricultural and seafood exports from March 1, 2026, enhancing bilateral trade relations and economic cooperation.

2026 Spring Festival Fuels China’s Dual Circulation Strategy with Record Tourism, Spending

China’s 2026 Spring Festival holiday drove record tourism and consumer spending, signaling strong momentum for the dual circulation economic strategy, with 596M domestic trips and $115.8B in tourism revenue.

Insta360 Secures U.S. Market Access After GoPro Patent Ruling

Insta360 prevails in U.S. patent dispute with GoPro, securing access to American markets while defending its camera technology as independently developed.

Japan’s Rightward Shift: US Strategy Under Scrutiny in 2026

Japan’s accelerating constitutional reforms and military expansion under PM Takaichi challenge regional stability, testing US strategic balancing in 2026.

Ceasefire Enables Critical Repairs at Zaporizhzhia Nuclear Plant

A localized ceasefire at Zaporizhzhia Nuclear Power Plant enables critical repairs after February’s infrastructure damage, with IAEA involvement ensuring safety.

Cambodian King and Queen Mother Begin Beijing Visit

Cambodia’s King and Queen Mother arrive in Beijing for strategic talks, celebrating 10 years of comprehensive partnership between the two Asian nations.

Paramount Secures Warner Bros in $31B Deal as Netflix Exits Bidding War

Paramount clinches Warner Bros in a $31B deal after Netflix exits bidding war, creating a streaming giant facing regulatory scrutiny.