China Accelerates Space Mining Tech for Asteroid Resources

China advances space mining tech with new robots and asteroid missions, aiming to harness extraterrestrial resources for future energy and industry.

China Executes 11 Linked to Myanmar Telecom Fraud Networks

China executes 11 gang members involved in telecom fraud and cross-border crimes linked to northern Myanmar, following a Supreme People’s Court review.

U.S.-Iran Tensions Peak as Military Moves Intensify Regional Concerns

Escalating U.S.-Iran tensions spark regional military alerts and diplomatic efforts as Tehran boosts drone capabilities amid protests.

Canada’s China Pivot Signals Global Shift Amid U.S. Order Decline

Canada’s new strategic partnership with China reflects global realignments as nations adapt to changing U.S. leadership and economic uncertainties in 2026.

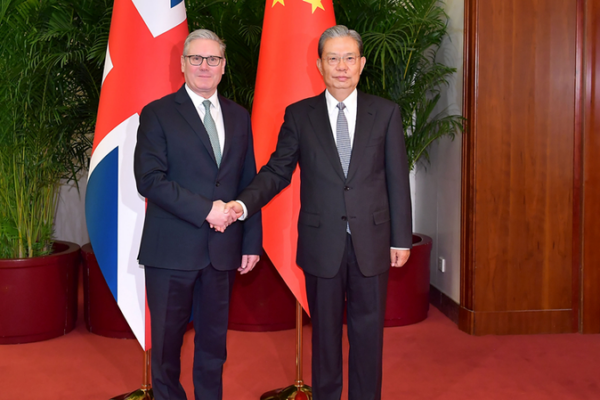

UK PM Starmer Touts ‘Huge Opportunity’ in China Visit as Ties Deepen

British PM Keir Starmer’s Beijing visit highlights efforts to strengthen China-UK economic and diplomatic ties amid global shifts, emphasizing mutual benefits and multilateralism.

China, UK Reaffirm Strategic Partnership in High-Level Talks

China’s top legislator and the UK PM strengthen bilateral ties through strategic dialogue, emphasizing cooperation and parliamentary exchanges in a 2026 meeting.

UK PM Starmer’s China Visit Revives Bilateral Dialogue After 8-Year Hiatus

UK Prime Minister Keir Starmer’s first China visit in eight years sparks discussions on renewed economic collaboration and tech partnerships.

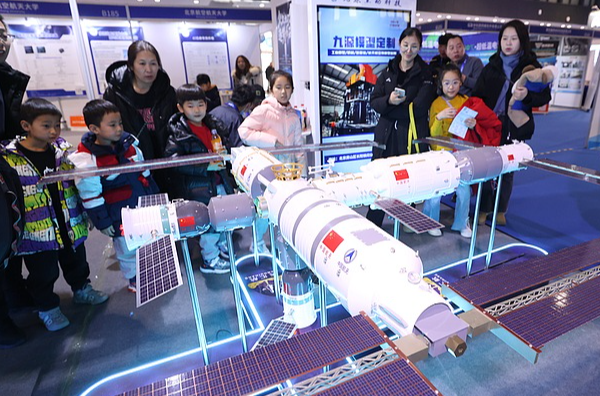

China Unveils 2026-2030 ‘Space+’ Strategy for Tourism, Mining & Beyond

China’s 2026-2030 space strategy targets tourism, resource extraction, and orbital infrastructure, signaling new ambitions in the global space economy.

China, Russia Deepen Strategic Ties Amid Key Partnership Anniversaries

China pledges to deepen strategic coordination with Russia during the 30th anniversary of their partnership, emphasizing joint contributions to regional stability and global security frameworks.

Climate Change and La Niña Drive Southern Africa’s Flood Crisis: Report

A new report links southern Africa’s devastating floods to climate change and La Niña, highlighting a 40% rise in extreme rainfall intensity since preindustrial times.

China-UK Ties Poised for Growth Amid Global Shifts: CGTN Poll

A CGTN poll finds 85% of global respondents optimistic about China-UK cooperation as the British PM concludes a historic visit, highlighting economic synergy and multilateral responsibilities.

UK Firms Bullish on China Amid Starmer’s Landmark Visit

UK companies express strong confidence in China’s market as PM Starmer makes the first visit by a British leader in eight years, signaling renewed economic engagement.

China, UK Pledge Enhanced Economic Ties in 2026 Talks

China and UK agree to expand trade and tech cooperation during Premier Li Qiang’s talks with British PM Keir Starmer in Beijing, signing 15 new agreements.

China Raises Alarm Over Japan’s Military Shifts in 2026

Chinese defense officials warn of Japan’s military expansion in 2026, citing threats to regional stability and post-war agreements.

China’s Industrial Profits Surge as Capital Shifts Boost Recovery

China’s industrial profits rebound in 2026 through strategic capital reallocation to advanced manufacturing and green technologies, signaling economic transformation.

China’s 2025: Record Heat Tests Renewable Energy Push

China faced record heat and extreme weather in 2025, testing renewable energy infrastructure amid climate challenges, according to official reports.

China, U.S. Commit to Economic Dialogue Amid 2026 Trade Consensus

China pledges to deepen economic collaboration with the U.S. in 2026, building on 2025 trade consultations and high-level consensus.

China Reports Sustained Ecological Progress in Key Regions

China’s Ministry of Ecology and Environment reports sustained ecological improvements in nine key regions, with intensified conservation efforts driving measurable environmental gains.

Chinese Fossils Reveal Secrets of Earth’s First Mass Extinction

Groundbreaking fossil discovery in China’s Hunan Province reveals new details about Earth’s first mass extinction event 513 million years ago, published in Nature today.

U.S. Immigration Crackdown Sparks Nationwide Protests Amid Rising Tensions

Nationwide protests erupt against U.S. immigration enforcement tactics following fatal shootings, exposing deep political divides and public safety concerns.