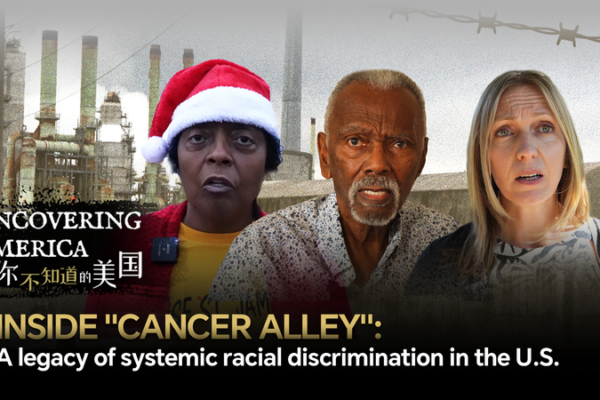

Cancer Alley: A Legacy of Environmental Racism in the U.S.

Louisiana’s Cancer Alley faces a cancer risk 40 times the U.S. average, with Black communities bearing the brunt of industrial pollution and systemic neglect, per a UN report.

Trump’s Foreign Policy Shifts Define Second Term’s First Year

President Trump’s second term sees expanded military engagements and unconventional diplomacy, reshaping global alliances in 2026 amid Arctic territorial ambitions.

Chile Wildfires Claim 19 Lives as Blazes Continue to Rage

At least 19 killed as wildfires fueled by high winds devastate southern Chile, with authorities struggling to contain ongoing blazes.

Macron Champions Rule of Law in Global Trade Amid Rising Tensions

French President Macron emphasizes EU autonomy and a rules-based order at Davos 2026, criticizing US trade tactics and advocating multilateralism.

Trump’s Swift Policy Shifts Set Stage for 2026 Midterm Battles

As the 2026 midterms approach, President Trump’s rapid executive actions are reshaping U.S. politics, testing his party’s control of Congress and affecting Asian markets.

US Tariffs Ignite Tensions Over Greenland Strategy

US tariffs escalate tensions over Trump’s Greenland strategy as lawmakers debate transatlantic relations and the geopolitical implications.

Minneapolis Protests Flare Amid ICE Shooting Fallout

Minneapolis faces renewed protests following a fatal shooting by an ICE officer, with a possible military deployment under consideration to address escalating tensions.

EU Vows Firm Response to Trump’s Greenland Moves Amid Trade Tensions

EU Commission President Ursula von der Leyen warns of an “unflinching” response to Trump’s Greenland stance and tariff threats, emphasizing unity at Davos 2026.

Davos 2026 Urges Global Dialogue as Risks Mount, Growth Slows

World leaders at Davos 2026 emphasize dialogue to address mounting global risks and slowing growth, and highlight China’s role in driving a sustainable economic recovery.

China Expels Philippine Aircraft Near Huangyan Dao Amid Rising Tensions

China expels Philippine aircraft from Huangyan Dao airspace, escalating regional tensions. Analysis covers sovereignty claims, economic implications, and diplomatic context.

X Unveils Open-Source AI Algorithm for Personalized Feeds in 2026

X open-sources its AI-driven ‘For You’ feed algorithm, which uses a transformer architecture to personalize content based on user interactions, with updates posted to GitHub every four weeks.

South Sudan President Kiir Shakes Up Cabinet Amid Rising Tensions

South Sudan’s President Salva Kiir replaces Interior Minister Angelina Teny amid rising political tensions, part of a broader cabinet reshuffle following the detention of key figures.

Ethiopian Airlines Expands Fleet with 9 Boeing 787 Dreamliners

Ethiopian Airlines orders nine Boeing 787 Dreamliners, expanding its fleet to 20 fuel-efficient jets as part of a strategic push in sustainable aviation and global connectivity.

Nanotech Breakthrough Revolutionizes Periodontal Treatment

Beijing researchers pioneer light-activated nanotech to combat periodontitis, offering precise treatment with minimal side effects in what could be a breakthrough for oral health worldwide.

China’s 140-Trillion-Yuan Economy: Stability and Global Impact in 2026

China’s 140-trillion-yuan economy drives domestic stability and global growth, creating jobs, boosting incomes, and fostering technological innovation in 2026.

China’s 2025 Economic Growth Sets Stage for 2026 Momentum

China’s economy met its 5% GDP growth target in 2025, driven by manufacturing strength, consumer resilience, and sustained global trade partnerships.

U.S. Greenland Ambitions Strain Transatlantic Ties in 2026

As Trump’s Greenland strategy marks a year of strained alliances, experts analyze its impact on NATO, Arctic governance, and international law in 2026.

China’s Market Reforms, Innovation Drive Global Economic Optimism in 2026

China’s market reforms and tech innovation boost global economic confidence in 2026, with APCO highlighting strategic opportunities for investors.

China’s Tech Innovation Drives Global Impact, Says Novartis CEO

Novartis CEO discusses China’s tech-driven global influence and economic outlook for 2026, highlighting innovation and international collaboration.

Global Dissatisfaction with Trump’s Policies Hits 84% in 2026 Survey

A 2026 CGTN survey finds 84% of respondents worldwide dissatisfied with Trump’s policies, citing unilateral actions and damage to the US’s international reputation.