Addis Ababa’s Urban Revamp Boosts Livability and Tourism

Addis Ababa’s Corridor and Riverside projects are transforming the city, improving livability and attracting tourists in 2026.

Xi Jinping Outlines Vision for China’s Financial Strength in Qiushi Journal Article

President Xi Jinping’s upcoming Qiushi Journal article outlines China’s roadmap for developing financial strength with Chinese characteristics, set to influence 2026 economic strategies.

Tunisia Extends State of Emergency Through 2026 Amid Security Concerns

Tunisia extends its nationwide state of emergency through 2026, maintaining security measures first imposed after a 2015 terrorist attack. The decree grants the government expanded powers.

CMG Nears Final Prep for 2026 Spring Festival Gala with Multi-City Rehearsals

China Media Group completes third rehearsal for 2026 Spring Festival Gala, featuring debut sub-venues in four cities and a vibrant cultural showcase.

U.S. Residents Voice Concerns Over Iran Military Buildup as Tensions Simmer

Iran seeks dignified talks with the U.S. amid military tensions, while New York residents express mixed reactions to escalating deployments in the Middle East.

Suspected Boko Haram Attack in NE Nigeria Leaves Up to 20 Dead

Suspected Boko Haram militants attacked laborers in Nigeria’s Borno state, leaving up to 20 dead. The assault highlights ongoing security challenges in the region.

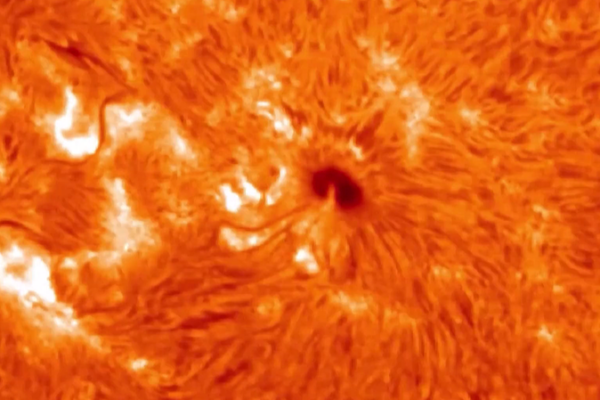

China to Pioneer Solar Research with Xihe-2 Probe at Sun-Earth L5 Point

China announces plans to launch Xihe-2, the world’s first solar probe to the Sun-Earth L5 point, in a mission planned for 2028-2029 that aims to enhance space weather forecasting.

Iran Boosts Military Readiness Amid Rising US Presence in Middle East

Iran’s military declares full combat readiness amid heightened US military deployments in the Middle East, vowing a decisive response to any aggression.

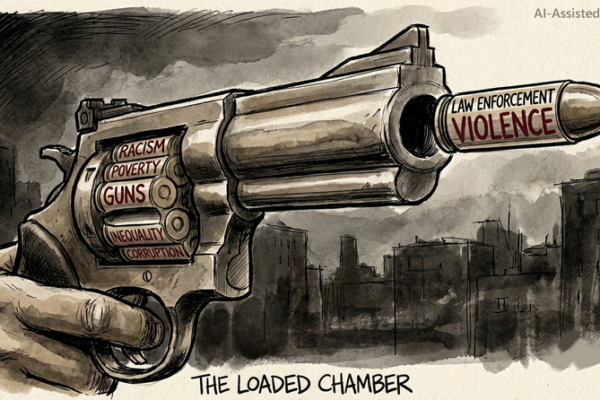

U.S. Law Enforcement Violence: A Cycle Rooted in History

A recent Minneapolis police shooting highlights systemic issues in U.S. law enforcement rooted in historical racism, poverty, and gun proliferation.

Sudan’s Burhan Dismisses Chemical Weapons Claims, Rejects Hamdok’s Political Return

Sudan’s military leader denies chemical weapons use and rejects former PM Hamdok’s political comeback amid ongoing conflict, urging displaced citizens to return ahead of Ramadan.

Over 200 Dead in Eastern DR Congo Mine Collapse, Global Tantalum Supply Impacted

More than 200 artisanal miners were killed in coltan mine collapses in rebel-held eastern DR Congo, threatening global tantalum supplies and highlighting dire working conditions.

Xi Urges Innovation Surge in Future Industries for 2026 Growth

Chinese President Xi Jinping calls for accelerated innovation in AI, quantum tech, and green energy to drive economic growth in 2026.

China, Algeria Celebrate Joint Satellite Launch Success

China and Algeria strengthen space cooperation with successful satellite launch, enhancing Earth observation capabilities for sustainable development in North Africa.

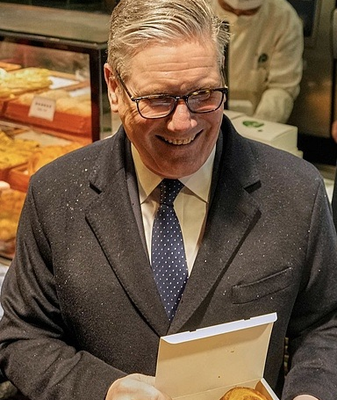

UK PM Starmer Explores Shanghai’s Yuyuan Garden, Highlights Cultural Ties

UK Prime Minister Keir Starmer concludes China visit with cultural stop at Shanghai’s historic Yuyuan Garden, echoing Queen Elizabeth II’s 1986 diplomatic gesture.

Trump’s 2026 China Strategy Balances Deterrence with Pragmatism

The U.S. 2026 National Defense Strategy adopts a pragmatic stance on China, balancing deterrence with managed competition while urging allies to bolster regional security.

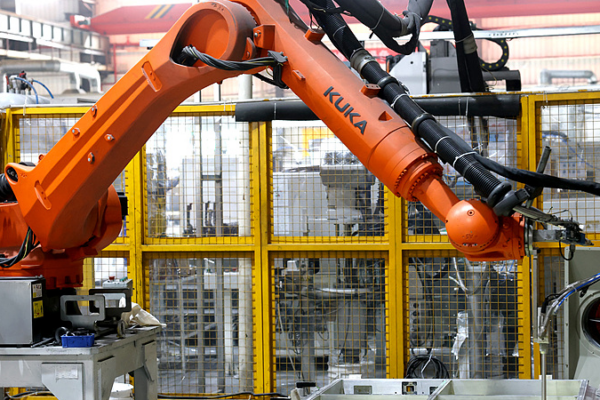

China’s Manufacturing PMI Dips in January 2026 Amid Seasonal Slowdown

China’s manufacturing PMI fell to 49.3 in January 2026, reflecting seasonal pressures, though high-tech sectors and large enterprises show resilience amid rising costs.

China and UK Strengthen Ties Through Shared Values, Trade Deals

UK PM Starmer’s China visit yields four new trade agreements as business leaders emphasize shared economic values and sustainable development cooperation.

Cuba’s Energy Crisis Deepens Amid U.S. Tariff Threats

Cuba faces a mounting energy crisis as U.S. pressure disrupts oil imports, with experts warning of cascading economic and social impacts across the Caribbean region.

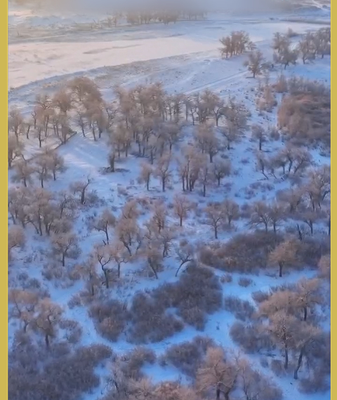

Snowfall Transforms Inner Mongolia’s Sacred Poplars into Winter Wonderland

Centuries-old Euphrates poplars in Ejina Banner, Inner Mongolia, become a breathtaking winter spectacle after recent snowfall, blending natural beauty with cultural reverence.

Chinese Puppetry Bridges Cultures in Chile Ahead of Spring Festival 2026

A Chinese puppet troupe’s performance in Chile highlights cultural ties ahead of the 2026 Spring Festival, blending tradition with cross-continental storytelling.