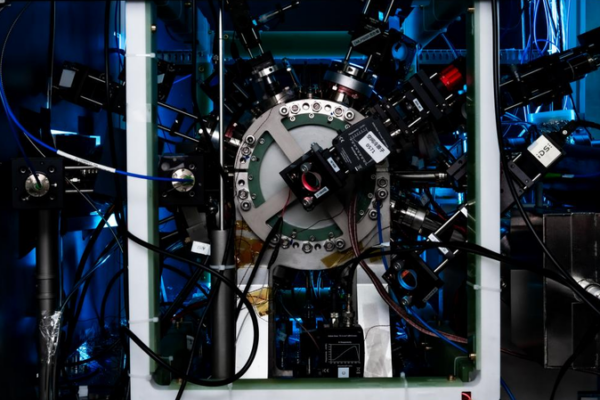

Chinese Optical Clock Achieves 1-Second Error Over 30B Years

Chinese scientists develop world’s most precise optical clock, accurate to 1 second over 30 billion years, enabling breakthroughs in physics and Earth monitoring.

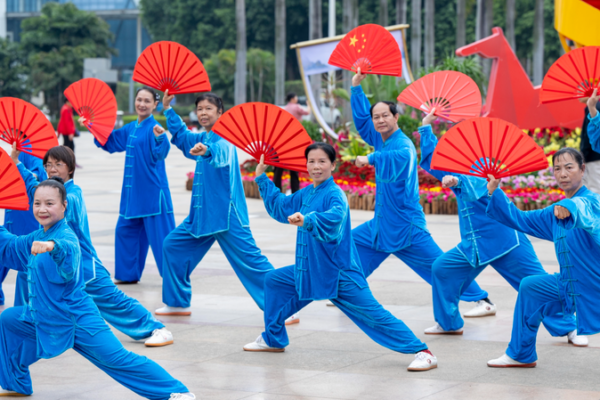

China Boosts High-Quality Service Imports, Expands Cultural Exports in 2026

China announces 2026 plans to boost high-quality service imports and expand cultural exports, leveraging visa reforms and traditional sectors for trade growth.

China Unveils 2026-2030 Market Stability Plan to Boost Investor Confidence

China announces 2026-2030 market stability plan, focusing on corporate governance reforms and enhanced investor returns to strengthen economic resilience.

China Sets 2026 Fiscal Funding Record to Boost High-Quality Growth

China announces record fiscal funding for 2026, allocating over 30 trillion yuan to drive high-quality development, with increased investments in technology, education, and social welfare.

China’s First ‘Emotion Library’ Opens in Wuhan to Soothe Minds

China’s first emotion-themed library in Wuhan offers tech-driven zones for emotional healing through literature and music, blending mindfulness with modern innovation.

China’s ‘Silver Economy’ Booms as Seniors Embrace Travel and Tech

China’s silver economy surges as seniors drive demand for travel, wellness, and tech-integrated elder care in 2026.

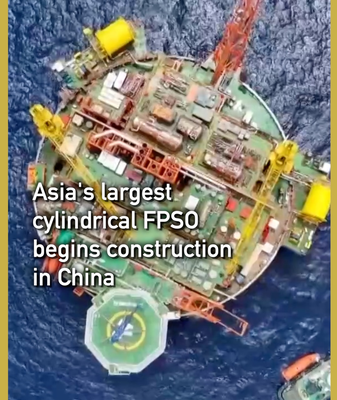

China Launches Asia’s Largest Cylindrical FPSO for Deepwater Oil Extraction

China begins construction in Qingdao of Asia’s largest cylindrical FPSO (floating production, storage and offloading) unit, set to boost deepwater oil extraction in the Pearl River Mouth Basin.

Iran Intercepts Israeli Missiles Over Tehran as Tensions Escalate

Iran intercepts Israeli missiles over Tehran as cross-border strikes enter their seventh day, raising regional security concerns and global market anxieties.

China Confident in Achieving 2026 Growth Target Amid Economic Optimism

China expresses confidence in achieving its 2026 economic growth target of 4.5%-5%, citing strong fundamentals and institutional advantages.

China Rejects Currency Devaluation for Trade Gains, Central Bank Governor States

China’s central bank governor emphasizes that the country will not devalue its currency to gain trade advantages, reaffirming commitment to stable economic policies.

Middle East Conflicts Threaten Iran’s Cultural Heritage

As Middle East tensions escalate, Iran and neighboring countries close key cultural sites to protect heritage, with Golestan Palace damaged in recent strikes.

China Targets 80-Year Life Expectancy by 2030 Amid Health Push

China unveils plan to increase average life expectancy to 80 years by 2030 through enhanced healthcare reforms and infrastructure development.

Kunming Restaurants Blend Cuisine and Culture with Folk Performances

Kunming’s restaurants are revitalizing cultural tourism through traditional folk performances paired with local cuisine, creating immersive dining experiences in 2026.

US Supreme Court Curbs Trump-Era Tariffs, Reshaping Global Trade Dynamics

The US Supreme Court’s 2026 ruling against Trump-era tariffs sparks shifts in global trade dynamics and US-Asia economic relations.

Iran Crisis Escalates: Global Powers Urge Restraint Amid Tensions

As Iran vows retaliation after a US-Israeli strike, China calls for dialogue to prevent full-scale war and resolve nuclear disputes peacefully.

China’s Strategic Growth Shift Prioritizes Quality Over Speed in 2026

China’s 2026 economic plan balances growth targets with quality development priorities, emphasizing technological innovation and social welfare improvements.

China Sets 725 Million Tonne Grain Target by 2030 to Strengthen Food Security

China aims to produce 725 million tonnes of grain annually by 2030, bolstering food security amid climate challenges and global uncertainties.

Xinjiang Goat-Baiting Challenge Unites Communities Through Tradition

Altay Prefecture’s goat-baiting challenge bridges tradition and modernity, fostering unity through Xinjiang’s cultural heritage while driving rural development in 2026.

Xi’an’s ‘Tree of Life’ Blends Ancient Silk Road Heritage with Modern Engineering

Xi’an’s new 57-meter steel ‘Tree of Life’ combines ancient Silk Road symbolism with cutting-edge engineering, serving as a modern landmark for cultural exchange.

Tibetan Melodies Meet Modern Brass: Qinghai’s Youth Band Captivates Global Audiences

Shanghai Conservatory’s Tang Shengsheng pioneers a youth brass band in Qinghai, merging traditional Tibetan music with modern brass, bridging cultural divides and captivating global audiences.