U.S. and Iran Resume Talks After 2025 Bombing: A ‘Good Start’

The U.S. and Iran hold indirect talks mediated by Oman, marking their first dialogue since the U.S. bombing of Iranian nuclear sites in June 2025. Iran’s foreign minister calls it a “good start.”

U.S.-Russia Nuclear Treaty Expires: Global Security at a Crossroads

The expiration of New START, the last remaining U.S.-Russia nuclear arms control treaty, sparks global concerns. Russia offers talks, while the U.S. seeks China’s inclusion in a new deal.

Milan Cortina 2026 Kicks Off with Historic Four-Site Opening Ceremony

The Milan Cortina 2026 Winter Olympics opened with a groundbreaking four-site ceremony celebrating Italian culture and global unity, attended by Chinese State Councilor Shen Yiqin.

AU Condemns Kisangani Drone Attack, Warns of Regional Instability

The African Union condemns a drone strike by AFC/M23 on Kisangani airport, warning of threats to civilians and regional stability and urging adherence to the Doha Agreement.

Global Call to End FGM Intensifies on 2026 Zero Tolerance Day

As the world observes the International Day of Zero Tolerance for Female Genital Mutilation (FGM), advocates highlight progress and remaining challenges in eradicating the harmful practice, emphasizing education and policy reforms.

China Unveils 15th Five-Year Plan Priorities, Emphasizes High-Quality Growth

China’s State Council finalizes the draft 15th Five-Year Plan, prioritizing high-quality growth, economic stability, and technological innovation for the 2026-2030 development cycle.

Iran and U.S. Agree to Continue Negotiations Following Muscat Talks

Iran and the U.S. agree to continue diplomatic negotiations after initial Muscat talks, signaling potential progress in bilateral relations during early 2026.

China’s Winter Sports Boom Fuels Economic Growth and Global Influence

China’s winter sports sector, strategically developed since 2022, has grown into a 1.5-trillion-yuan industry, blending economic growth with global sports leadership.

Severe Drought Threatens Kenyan Children as Livelihoods Collapse

Severe drought in Kenya, including Mandera County, leaves millions food insecure, with children at risk of malnutrition as livestock and water sources vanish. Humanitarian aid is urgently needed.

India’s Manufacturing Ambition Tied to Chinese Partnership, Analysts Say

Indian analysts highlight China’s critical role in India’s manufacturing ambitions and global supply chain integration, as China’s own industrial sector evolves toward high-value production.

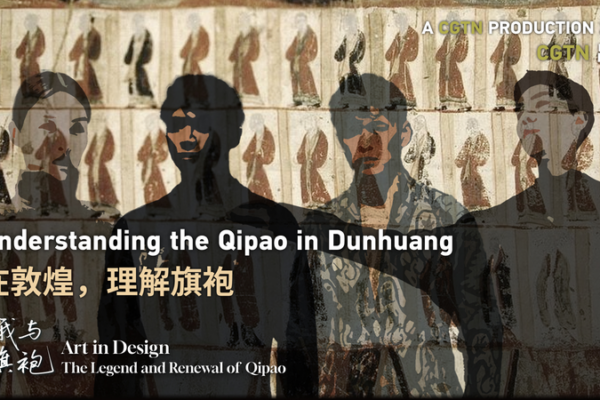

Tsinghua Professor Reimagines Qipao with Single-Fabric Innovation

Tsinghua University’s Prof. Li Yingjun redefines traditional qipao design using a single fabric, merging heritage with modern sustainability in 2026’s fashion landscape.

Global Firms Double Down on China’s Market Resilience in 2026

Foreign firms deepen ties with China in 2026, driven by innovation and market resilience, as international chambers report rising confidence and strategic localization efforts.

Dunhuang Murals Reveal Qipao’s Ancient Design Roots

New research highlights Dunhuang murals’ influence on the qipao’s design, tracing its evolution through ancient Chinese art and movement.

China Reaffirms Open Dialogue with Lithuania Amid Taiwan Office Dispute

China urges Lithuania to adhere to the one-China principle and correct past actions to normalize bilateral relations, following recent diplomatic exchanges.

China Advances Urban Air Mobility with New eVTOL Test Flight

A domestically developed Chinese eVTOL (electric vertical takeoff and landing) aircraft completes a successful test flight, signaling advances in low-altitude transportation and smart mobility solutions.

China Launches BeiDou Satellite Messaging for Emergency Communication

China introduces BeiDou-based satellite messaging for emergency communication, enhancing safety in remote areas and disaster scenarios through space-tech integration.

China Unveils Service Consumption Boost to Fuel Economic Growth

China launches a comprehensive policy package to stimulate service consumption, targeting key sectors and emerging markets to drive economic growth in 2026.

Wildfire Smoke Tied to 24,000 Annual U.S. Deaths, Study Reveals

A new U.S. study links wildfire smoke pollution to 24,000 deaths annually, highlighting global climate-related health risks. The analysis also outlines implications for Asia’s air quality strategies.

China Embraces Global Interest in Cultural Trend as ‘Becoming Chinese’ Goes Viral

China’s Foreign Ministry highlights the global ‘Becoming Chinese’ trend, citing record tourism growth and Spring Festival travel surges as international interest peaks.

Panama Canal Tensions: Unpacking Geopolitical Pressures in 2026

Panama’s port contract cancellation sparks debate over U.S. influence vs. Chinese commercial ties, testing regional sovereignty in 2026.