EU Condemns Trump’s NATO Remarks, Greenland Tensions Escalate

European leaders denounce U.S. President Donald Trump’s NATO comments and military threats over Greenland, straining transatlantic relations in 2026.

China’s Industrial Profits Rebound in 2025 After Three-Year Slump

Profits at China’s large-scale industrial enterprises grew 0.6% in 2025, ending a three-year decline, driven by policy support and manufacturing resilience.

Japan’s Early Election Sparks Political Strategy Debate

Japan’s snap election called by PM Takaichi raises questions about political strategy amid economic challenges and regional tensions. Analysis of domestic and international implications.

U.S. Military Buildup in Middle East Sparks Iranian Warning Amid Regional Tensions

The U.S. deploys a carrier group to the Middle East amid rising tensions with Iran, prompting regional security concerns and market caution in January 2026.

The ‘Kill Line’ Phenomenon: America’s Deepening Crisis of Resilience

Exploring the ‘kill line’ phenomenon in the U.S., where systemic fragility leaves millions one crisis away from collapse. Analysis of institutional erosion and its impact on social stability.

Xi Jinping, Finnish PM Orpo Strengthen Ties in Beijing Talks

Chinese President Xi Jinping and Finnish PM Petteri Orpo discussed enhanced cooperation in green tech and Arctic development during their Beijing meeting on January 27, 2026.

China Condemns Japan’s Taiwan Remarks, Urges Historical Reflection

China urges Japan to correct PM Takaichi’s remarks on Taiwan, stressing the historical and legal facts of Taiwan’s return to China as part of the post-WWII international order.

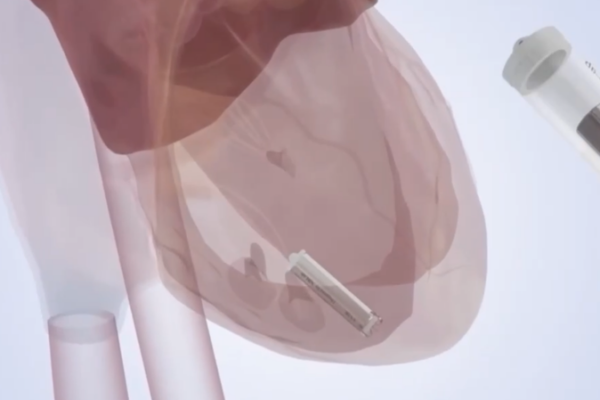

China Unveils Self-Powered Micro Pacemaker, Revolutionizing Cardiac Care

Chinese researchers develop a self-powered micro pacemaker that could eliminate replacement surgeries, representing a major advance in implantable medical technology.

China Unveils 286-Strong Squad for Milano Cortina 2026 Winter Olympics

China announces a 286-member squad for the 2026 Winter Olympics, featuring defending champions Gu Ailing and Su Yiming alongside veterans and new talent.

Chinese Scientists Decode Genome of Protected Fish Species, Boost Conservation Hopes

Chinese researchers achieve a breakthrough in mapping the genome of Bagarius rutilus, aiding conservation efforts for the endangered species.

Hamas, Israel Confirm Completion of Hostage Returns Under 2025 Ceasefire

Hamas says the recovery of the last Israeli hostage’s remains fulfills the ceasefire terms, as Israel confirms all captives have been returned from Gaza. Tensions persist over compliance with the agreement.

China Unveils AI-Driven Extreme Weather Forecasting System for 2026

China announces an AI-powered weather forecasting system for 2026 to improve predictions of extreme weather events and strengthen disaster preparedness.

U.S. Announces Tariff Hike on South Korean Imports Amid Trade Tensions

The U.S. plans to raise tariffs on select South Korean imports to 25%, prompting Seoul to seek urgent talks as trade tensions escalate in early 2026.

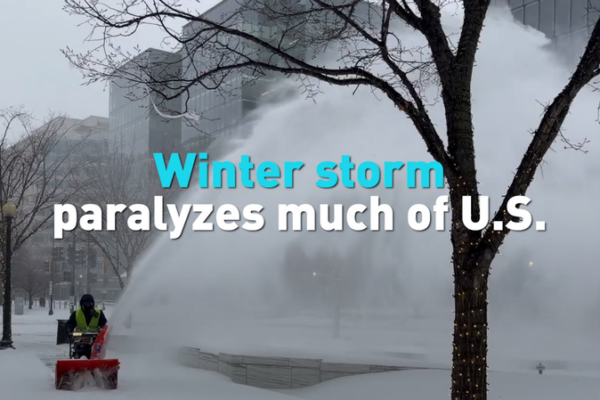

Major Winter Storm Disrupts U.S., Impacts Felt Across Asia-Dependent Sectors

A historic U.S. winter storm disrupts global supply chains and diaspora travel plans, with significant implications for Asian economies and communities worldwide.

U.S. Border Chief to Exit Minneapolis Amid Shooting Fallout

U.S. Border Patrol Chief Gregory Bovino is set to leave Minneapolis after a fatal shooting controversy, as Trump sends a new envoy to oversee operations.

U.S. Winter Storm Triggers Power Outages, Travel Disruptions

A major winter storm causes power outages, flight cancellations, and over a dozen fatalities across the eastern U.S., with freezing temperatures persisting this week.

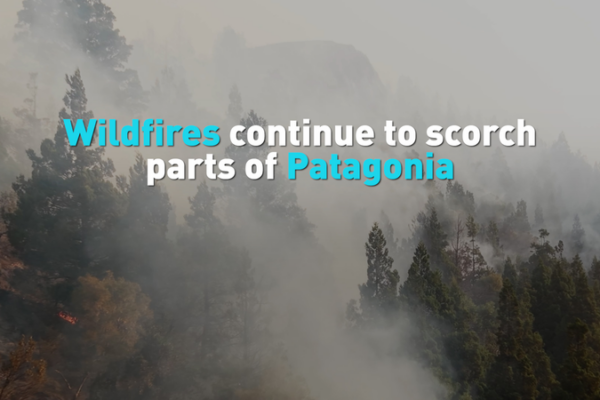

Patagonia Wildfires Threaten Ancient Forests, Ecosystems in 2026

Wildfires in Patagonia have consumed over 30,000 hectares in Argentina, endangering ancient forests and a UNESCO World Heritage site amid extreme weather conditions in 2026.

Winter Storm Disrupts U.S. Travel and Infrastructure, Impacts Felt Across Regions

A severe winter storm sweeps across the U.S., causing widespread travel chaos, power outages, and economic disruptions as temperatures plummet.

Europe’s Oyster Revival: A Blueprint for Coastal Restoration

Conservationists across the UK, including Scotland, are leading groundbreaking efforts to revive Europe’s native flat oyster populations through innovative reef restoration and genetic preservation.

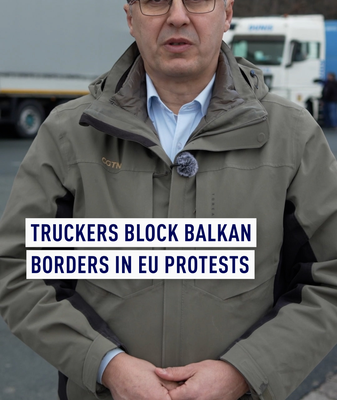

Balkan Truckers Block EU Borders Over Digital Entry Rules

Serbian and Bosnian truck drivers block EU border crossings, protesting digital tracking rules they say cripple cross-border freight operations.