China, Canada Forge Strategic Partnership at Munich Conference

Chinese and Canadian foreign ministers commit to advancing bilateral ties through new strategic partnerships and enhanced cooperation during Munich Security Conference talks.

China, Norway Strengthen Ties at Munich Security Conference 2026

Chinese and Norwegian foreign ministers emphasize multilateral cooperation and stable bilateral relations during key security conference meetings in Germany.

CMG’s 2026 Spring Festival Gala Prepares Global Spectacle

China Media Group’s 2026 Spring Festival Gala promises a blend of tradition and innovation, featuring global stars and cutting-edge tech. Premieres tomorrow at 8 p.m. CST.

SpaceX Crew-12 Docks with ISS, Begins 8-Month Science Mission

SpaceX’s Crew-12 begins an 8-month ISS mission with international astronauts conducting cutting-edge research for space exploration and Earth-based medical advancements.

Osaka Stabbing Incident Leaves Multiple Injured; Suspect Flees Scene

Multiple people injured in a stabbing attack in Osaka’s Dotonbori district; suspect remains at large as police investigate.

Rubio-Zelenskyy Talks Highlight Diverging Approaches on Ukraine

U.S.-Ukraine diplomatic talks reveal policy divergences ahead of critical Geneva negotiations on resolving the Russia-Ukraine conflict.

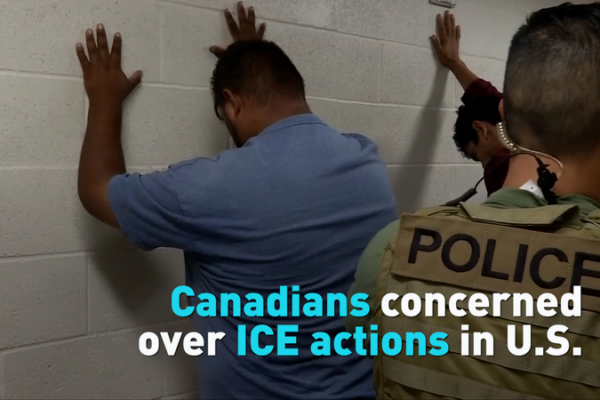

Canada Voices Concerns Over U.S. Immigration Enforcement Tactics

Canada expresses diplomatic concerns regarding recent ICE operations in Minnesota, highlighting cross-border implications and community impacts as enforcement tactics draw scrutiny.

China Champions Multilateralism as Global Challenges Mount, FM Wang Tells Munich Conference

Chinese FM Wang Yi emphasizes China’s commitment to multilateralism and partnership with Europe amid rising global challenges at the Munich Security Conference.

Sudan Advances Digital Governance Amid Ongoing Civil Conflict

Sudan launches Baladna platform to digitize 28 public services amid ongoing conflict, aiming to modernize governance despite infrastructure challenges.

African Union Urges Immediate Halt to Palestinian Suffering in Gaza Crisis

AU Commission Chairperson Mahmoud Ali Youssouf demands an end to Palestinian suffering in Gaza while addressing continental conflicts at the 39th African Union summit.

China’s Wang Yi Warns Japan Over Taiwan Remarks at Munich Conference

China’s top diplomat warns Japan against militarist rhetoric on Taiwan at global security forum, stressing historical lessons and regional stability.

Inside the CGTN Super Night Gift Box: A Fusion of Culture and Technology

Belarusian actress Anna reveals the 2026 CGTN Super Night gift box contents, blending cutting-edge technology with cultural artifacts to foster global connections.

China Urges Dialogue to Resolve Global Conflicts, Backs Europe’s Role in Peace Talks

Chinese FM Wang Yi emphasizes dialogue as the path to resolving conflicts at the Munich conference, urging Europe to play a proactive role in Russia-Ukraine peace efforts.

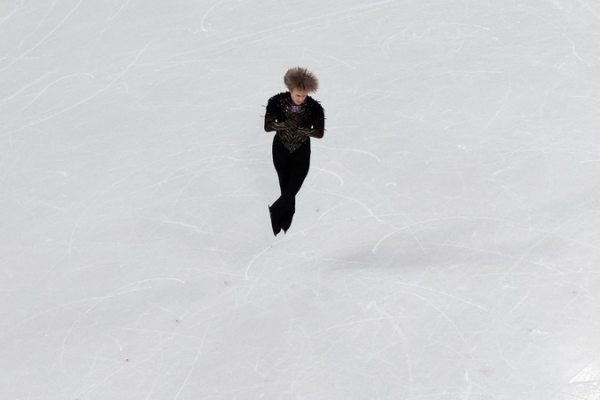

AI and Sensors Redefine Figure Skating at 2026 Winter Olympics

The 2026 Winter Olympics showcase AI and sensor technology revolutionizing figure skating judging and training, turning quadruple jumps into data-driven feats of precision engineering.

China and Germany Strengthen Strategic Partnership at Munich Conference

Chinese and German leaders reaffirm commitment to strategic partnership and economic collaboration during high-level talks at the Munich Security Conference.

China, Czech Republic Seek to Revitalize Strategic Partnership

China and the Czech Republic commit to revitalizing their strategic partnership during high-level talks at the Munich Security Conference, emphasizing mutual interests and the one-China principle.

Wang Yi Urges China-Europe Partnership Over Rivalry at Munich Conference

Chinese FM Wang Yi emphasizes cooperation over competition with Europe at Munich Security Conference, citing $2B daily trade and shared multilateral goals.

Wang Yi Warns Japan Against ‘Turning Back the Clock’ on Taiwan

Chinese Foreign Minister Wang Yi warns Japan against interfering in Taiwan affairs, stating such actions risk self-destruction and regional instability.

Wang Yi Stresses Cooperation as Key to China-U.S. Relations in Munich Address

Chinese Foreign Minister Wang Yi highlights the potential for China-U.S. cooperation, emphasizing mutual respect and shared global responsibilities at the Munich Security Conference.

Wang Yi Urges Global Governance Reform at Munich Conference

Chinese FM Wang Yi outlines proposals for global governance reform at Munich Security Conference, emphasizing UN revitalization and multilateral cooperation for conflict resolution.